By Jeff Williams, Co-Founder, Chief Technology Officer

August 17, 2016

For many application security vendors, "coverage" is the third rail. But it's a critical part of your application security strategy, maybe the most critical.

If you're a CISO, appsec program manager, or anyone else charged with creating an application security strategy, you should be thinking about coverage (and cost) all the time.


Seems simple, right? Of course you want to be sure your security verification efforts get good "coverage." Yet since the dawn of the OWASP Top Ten in 2003, vendors, consultants, managers, and CISOs have been reporting their appsec "coverage" in a disorganized, inaccurate, and often wildly optimistic way. Nobody in application security wants to touch this taboo topic. But what the hell, let's go there anyway...

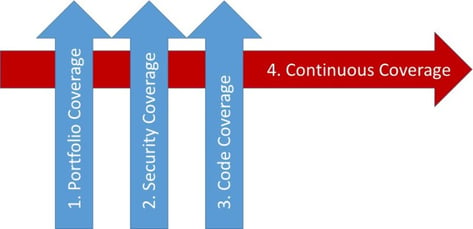

As we'll explore below, coverage isn't a single dimension. Pay special attention to the fourth dimension -- "Continuous Coverage." As software development accelerates and the threat gets increasingly persistent, making sure your security is continuously enforced and verified is critical.

Gaps in coverage are gaps in security. Technically, gaps in coverage are important (and almost universally ignored) risks: they're the "unknown unknowns." Unverified code could, and almost certainly does, contain vulnerabilities that an attacker might find and exploit. You'll never know how bad these "latent" vulnerabilities are, and you'll never know just how big a risk you are taking.

The bottom line is that unless you measure, you will never know how much coverage you have or where your unknown risks are. So let's break down the different dimensions of application security coverage.

The first kind of coverage is pretty simple. What chunk of your application portfolio are you verifying? Many organizations only scan their "critical" applications despite increasing evidence that major breaches are initiated through lesser web applications and web services. One CISO I talked to (with a so-called "mature" appsec program) told me "90% of our application portfolio is one click away from the Wall Street Journal."

The first step is to understand what applications you have. This is easier said than done. Application portfolios change quickly, so you are not likely to be able to manage the job with surveys and Excel spreadsheets. Your "asset inventory" isn't likely to be complete or to capture the information you need. Don't forget that there are multiple instances of each application across the entire lifecycle, including development, integration, QA, and production.

Automating your application inventory is absolutely worth it... critical even... particularly in DevOps organizations that are constantly spinning up new servers and containers to test applications. This is also a good time to gather a basic profile of each application, including the server used, libraries and frameworks (and versions), lines of code, and configuration details.

Recommendation: We've found that the best approach here is to enable your applications to inventory themselves (including libraries and components) and send that information to a central server continuously. This kind of instrumentation is not complex to set up and then you always have an up-to-date list of exactly what you're running. When new applications come online, they are automatically tracked. This works especially well if you add the instrumentation to your standard server build so it's a part of every application environment.
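To make the self-inventory idea concrete, here is a minimal sketch that assumes a Python application (a Java shop would do the equivalent with an agent or startup hook). `build_inventory` reads the runtime version, host name, and installed libraries from the running process itself; `send_inventory` and any collector URL you pass it are hypothetical placeholders for whatever central server you use, not a real product API. It covers only part of the profile described above (no lines of code or configuration details).

```python
import json
import platform
import socket
import urllib.request
from importlib import metadata


def build_inventory(app_name: str) -> dict:
    """Collect a basic self-profile of the running application.

    app_name is supplied by the caller; everything else is read from the
    current process and the Python distributions installed for it.
    """
    libraries = []
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs that lack a Name field
            libraries.append({"name": name, "version": dist.metadata.get("Version")})
    libraries.sort(key=lambda lib: lib["name"].lower())
    return {
        "application": app_name,
        "host": socket.gethostname(),
        "runtime": f"{platform.python_implementation()} {platform.python_version()}",
        "libraries": libraries,
    }


def send_inventory(inventory: dict, url: str) -> int:
    """POST the inventory as JSON to a (hypothetical) central collector."""
    body = json.dumps(inventory).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

You might call `build_inventory` at startup and again on a timer, then hand the result to `send_inventory`. Baking that call into your standard server build is what makes new applications show up automatically.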

Security coverage is about verifying that your security defenses are all in place, correctly implemented, and used properly. Coverage here requires breadth and accuracy.

Breadth is about verifying all the defenses each application actually needs. Every application is a beautiful and unique snowflake with a custom security architecture. Let's save that topic for an article about threat modeling and security architecture. For now, let's just focus on the basics that almost every application needs: safe communications, strong authentication, access control, injection defense, data encryption, etc.

How do you know you have the proper breadth? Does your tool vendor tell you that they cover a set of vulnerabilities? Do they cover *ALL* the different permutations of those vulnerabilities, or just the ones that are easy for their tool to detect? Did you test your tool to see what it's good and bad at? (If not, you are in for a surprise.) If you use manual penetration testing or code review, do you know what was actually tested? The challenge is making sure that you are verifying the full breadth of controls you are using.
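One way to keep yourself honest about breadth is to record, per application, which verification activity actually exercised each required control, and then look at what nothing covered. This sketch assumes the basic control list from the previous paragraph and a made-up record of what a DAST scan and a manual pentest verified for one application; all names are illustrative.

```python
# Basic controls most applications need; extend this per application.
REQUIRED_CONTROLS = {
    "safe communications",
    "authentication",
    "access control",
    "injection defense",
    "data encryption",
}

# Hypothetical record of which activity verified which control for one app.
VERIFIED_BY = {
    "DAST scan": {"injection defense", "safe communications"},
    "manual pentest": {"authentication"},
}


def breadth_report(required: set[str], verified_by: dict[str, set[str]]):
    """Return (fraction of required controls verified, sorted unverified controls)."""
    verified = set().union(*verified_by.values())
    covered = required & verified
    unverified = sorted(required - verified)
    return len(covered) / len(required), unverified


ratio, gaps = breadth_report(REQUIRED_CONTROLS, VERIFIED_BY)
print(f"breadth: {ratio:.0%}, unverified: {gaps}")
# breadth: 60%, unverified: ['access control', 'data encryption']
```

The useful output is the list of gaps, not the percentage: those are controls nobody has looked at.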

Accuracy focuses on the depth of the security analysis. Each type of vulnerability is best verified using different analysis techniques and different information. Some issues are found by analyzing HTTP, some in the code, some with runtime data flow, some in configuration, some in libraries, and some require multiple techniques. For a CSRF example, read my recent article about the information needed to analyze these vulnerabilities accurately. You should consider whether your tools even have the information necessary to accurately diagnose the vulnerabilities you care about.

Everyone in application security knows how much time is wasted on false positives. They are irritating, security-culture-killing noise that wastes time and destroys value. However, false negatives (real vulnerabilities that go undiscovered) are even more insidious. Nobody knows they are there, so they create a false sense of security and leave you totally exposed.
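Testing a tool before trusting it can be as simple as running it against test cases whose answers you already know, in the spirit of public suites such as the OWASP Benchmark, and scoring both kinds of error. In this sketch the test-case IDs and the tool's report are invented; the point is that the `missed` list (false negatives) deserves as much attention as the false positive rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolScore:
    true_positive_rate: float   # share of real vulnerabilities the tool reported
    false_positive_rate: float  # share of safe test cases the tool flagged anyway
    missed: tuple[str, ...]     # false negatives: real issues it never reported


def score_tool(vulnerable: set[str], safe: set[str], reported: set[str]) -> ToolScore:
    """Score a tool's report against test cases with known answers."""
    true_positives = reported & vulnerable
    false_positives = reported & safe
    return ToolScore(
        true_positive_rate=len(true_positives) / len(vulnerable),
        false_positive_rate=len(false_positives) / len(safe),
        missed=tuple(sorted(vulnerable - reported)),
    )


# Hypothetical test suite: four vulnerable cases and four safe ones.
score = score_tool(
    vulnerable={"case01", "case02", "case03", "case04"},
    safe={"case05", "case06", "case07", "case08"},
    reported={"case01", "case02", "case05"},
)
print(score)
# ToolScore(true_positive_rate=0.5, false_positive_rate=0.25, missed=('case03', 'case04'))
```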

Recommendation: We've found that the best approach is to use tools that have access to many different sources of information (code, HTTP, libraries, configuration, data flow, etc...) and use a combination of analysis techniques. Note: this is very different from trying to merge the results of multiple different single-dimensional tools. This "hybrid" approach has been attempted for over a decade and simply doesn't help. Post-analysis correlation is too difficult and therefore generates huge amounts of manual work triaging output from all the different tools. Focusing on the few vulnerabilities that actually do correlate can even de-emphasize exactly what a particular tool does uniquely well.
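The correlation problem is easy to see in miniature. Suppose a static tool reports findings by file and line, a dynamic scanner reports them by HTTP request, and you keep only what both agree on. The findings below are invented, and keying on (CWE ID, location) is just one plausible matching rule; real correlation engines are more elaborate but face the same mismatch.

```python
# Invented findings keyed by (CWE ID, location as each tool sees it).
sast_findings = {
    ("CWE-89", "UserDao.java:42"),   # SQL injection, seen in code
    ("CWE-79", "profile.jsp:17"),    # cross-site scripting, seen in code
}
dast_findings = {
    ("CWE-89", "GET /users?name="),  # the same SQL injection, seen over HTTP
    ("CWE-614", "GET /login"),       # cookie without the Secure flag, seen over HTTP
}


def correlate(*results: set) -> set:
    """Naive post-analysis correlation: keep findings every tool reported."""
    return set.intersection(*results)


agreed = correlate(sast_findings, dast_findings)
dropped = (sast_findings | dast_findings) - agreed
print(len(agreed), len(dropped))  # 0 4
```

Even if you normalized the two SQL injection entries so they matched, the intersection would still discard the XSS and cookie findings that only one tool was able to see.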

All security tools should address code coverage, because the number of theoretically possible paths through an application's code is staggeringly big. Like "number of particles in the universe" big. So understanding exactly what code a verification technique covers is critical.
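A little arithmetic shows why. If a piece of code contains n independent two-way branches executed in sequence, there are 2^n distinct paths through it, and loops make the count unbounded. The sketch finds how many such branches it takes to exceed 10^80, a commonly quoted rough estimate of the number of atoms in the observable universe; treat the comparison as an order-of-magnitude illustration.

```python
def path_count(independent_branches: int) -> int:
    """Paths through code with n independent if/else branches in sequence."""
    return 2 ** independent_branches


def branches_to_exceed(target: int) -> int:
    """Smallest number of independent branches whose path count exceeds target."""
    n = 0
    while path_count(n) <= target:
        n += 1
    return n


print(path_count(10))                # 1024
print(branches_to_exceed(10 ** 80))  # 266
```

A few hundred independent decisions is not much once libraries and frameworks are counted, which is why knowing what a technique actually exercised matters more than any claim of completeness.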

Tools need to analyze the entire application, including the custom code, dynamic code, frameworks, libraries, application server, configuration files, and runtime platform libraries. Modern applications are assembled at runtime, with techniques like inversion of control, reflection, dynamic class loading, and dependency injection. So if you want security code coverage, you have to analyze the assembled, running application, not a pile of source code.
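Here is a small Python illustration of why the assembled application differs from its source. The route table stands in for a configuration file or dependency-injection container (an assumption for the sketch). Nothing in the source calls `json.dumps` or `html.escape` directly, so an analysis that reads only call syntax, without evaluating the configuration, cannot tell which code `dispatch` will reach.

```python
import importlib

# Hypothetical wiring; in a real app this might come from config or a DI container.
ROUTES = {
    "serialize": "json:dumps",
    "escape": "html:escape",
}


def resolve(target: str):
    """Load 'module:attribute' at runtime, much like reflective class loading."""
    module_name, _, attribute = target.partition(":")
    return getattr(importlib.import_module(module_name), attribute)


def dispatch(route: str, argument):
    handler = resolve(ROUTES[route])  # the call target is only known at runtime
    return handler(argument)


print(dispatch("serialize", {"a": 1}))  # {"a": 1}
print(dispatch("escape", "<b>"))        # &lt;b&gt;
```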

If you’re using dynamic scanners (DAST), we encourage you to use a code coverage tool (such as EclEmma or JaCoCo) to measure the coverage you are actually getting. We typically see 20-25% coverage, and it’s very difficult to push that number higher. If you’re using static analysis (SAST), the analysis is just a black box: you have no way to know what code was actually analyzed. Although it seems like static analysis should analyze every line of code, what matters is all the paths through that code. Static tools build a "model" of the application and traverse it from the entry points they know about, losing flows that go through complex code, libraries, frameworks, reflection, IoC, deep stacks, etc. The result is poor code coverage.
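For a feel of what a coverage tool measures, here is a toy line-coverage recorder built on Python's `sys.settrace` hook. JaCoCo and EclEmma work differently (they instrument Java bytecode), and `classify` is a made-up stand-in for application code that a scanner exercises. The point is only that coverage is the fraction of executable lines your testing actually ran.

```python
import dis
import sys


def executable_lines(func) -> set[int]:
    """Lines in func's body that carry bytecode (the def line is excluded)."""
    code = func.__code__
    return {
        line
        for _, line in dis.findlinestarts(code)
        if line is not None and line != code.co_firstlineno
    }


class LineRecorder:
    """Record which lines of one target function execute."""

    def __init__(self, func):
        self.code = func.__code__
        self.hit: set[int] = set()

    def _trace(self, frame, event, arg):
        if frame.f_code is not self.code:
            return None  # ignore every other function
        if event == "line":
            self.hit.add(frame.f_lineno)
        return self._trace  # keep receiving line events for this frame

    def __enter__(self):
        self._previous = sys.gettrace()
        sys.settrace(self._trace)
        return self

    def __exit__(self, *exc_info):
        sys.settrace(self._previous)
        return False


def coverage_of(func, inputs) -> float:
    lines = executable_lines(func)
    with LineRecorder(func) as recorder:
        for value in inputs:
            func(value)
    return len(recorder.hit & lines) / len(lines)


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


partial = coverage_of(classify, [5])  # a "scan" that only sends positive input
full = coverage_of(classify, [5, -1])  # add input that reaches the other branch
print(f"{partial:.0%} -> {full:.0%}")
```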

Recommendation: We have found that directly instrumenting applications and analyzing them as they run is the most effective way to achieve code coverage. This approach observes the actual application as it was built, deployed, and operated. Basic security analysis starts as code is loaded, so (as with static analysis) every line of code, including libraries, frameworks, and the application server, gets analyzed.

But the real power of instrumentation happens throughout the normal software development process. Any testing that you do for any reason (e.g. developer testing, unit tests, integration tests, Selenium, or QA tests) now does double-duty as security tests. The security instrumentation is working in the background, actively analyzing any code that runs. You don't have to attempt to exploit vulnerabilities to discover them, so even novice developers doing their normal work can verify security. And, of course, you can accurately measure your coverage with any code coverage tool.
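To make "analysis in the background" concrete, here is a deliberately tiny taint-tracking sketch in Python. `Tainted`, `sql_sink`, and the query functions are hypothetical names invented for illustration; real instrumentation agents hook the runtime far more thoroughly, and this toy loses taint through f-strings, `format()`, and most string methods. Notice that the ordinary functional request uses a harmless name, not an attack payload, yet the unsafe query is still reported.

```python
import functools


class Tainted(str):
    """A string that remembers it came from an untrusted source."""

    def __add__(self, other):
        return Tainted(str.__add__(self, other))

    def __radd__(self, other):
        return Tainted(str.__add__(other, self))


FINDINGS: list[str] = []


def sql_sink(func):
    """Wrap a query function and report untrusted data reaching the query text."""

    @functools.wraps(func)
    def wrapper(query, params=()):
        if isinstance(query, Tainted):
            FINDINGS.append(f"SQL injection risk in {func.__name__}: {query!r}")
        return func(query, params)

    return wrapper


@sql_sink
def execute(query, params=()):
    return (str(query), tuple(params))  # stand-in for a real database call


def find_user_unsafe(name):
    return execute("SELECT * FROM users WHERE name = '" + name + "'")


def find_user_safe(name):
    return execute("SELECT * FROM users WHERE name = ?", (name,))


def handle_request(params, finder):
    name = Tainted(params["name"])  # source: an HTTP request parameter
    return finder(name)


handle_request({"name": "alice"}, find_user_unsafe)  # normal test input, no attack
handle_request({"name": "alice"}, find_user_safe)
print(FINDINGS)  # one finding, raised by the unsafe query only
```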

Finally, there’s a hidden dimension which is fast becoming the most important for application teams to consider: time. Remember, in the current threat environment, a single glimpse into application security once a year is not nearly enough visibility. You have to get it right over time... all the time.

Ideally, you want continuous verification of your applications across the software development lifecycle. This is a fundamental problem with scanning approaches: it's a lot of work (for experts) to run the scans and interpret the results, so the more you scan, the more people you need. Be careful here! Some vendors say "continuous" when they really mean scans that run on a repeatable schedule.

We have seen a lot of organizations attempting to do "DevSecOps" by running scanning tools on every code commit, after every build, or with every sprint. The problem is that the results just pile up and there aren't enough security experts to triage them. The result is frustrated development teams or, more often, a dashboard full of unaddressed vulnerabilities. Many respond by dealing with the security backlog at the end of the development process, but that's the most expensive and least effective way to do application security.
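The staffing problem is simple arithmetic. The sketch below uses made-up inputs (findings per scan that someone must review, minutes to triage each, analyst hours per week) purely to show the shape of the curve: with those inputs fixed, triage effort grows linearly with how often you scan.

```python
import math


def analysts_needed(scans_per_week: int, findings_per_scan: int,
                    minutes_per_finding: float, analyst_hours_per_week: float) -> int:
    """Analysts required to review every scan finding each week."""
    triage_hours = scans_per_week * findings_per_scan * minutes_per_finding / 60
    return math.ceil(triage_hours / analyst_hours_per_week)


# Hypothetical inputs: 40 findings to review per scan, 15 minutes each,
# 30 hours of triage capacity per analyst per week.
print(analysts_needed(1, 40, 15, 30))   # weekly scan: 1
print(analysts_needed(50, 40, 15, 30))  # ~50 commits a week, one scan each: 17
```

Deduplicating repeat findings lowers the per-scan number, but as long as experts must review scanner output, the headcount still scales with scan frequency.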

Recommendation: We believe in using security instrumentation to continuously verify applications because it starts working when the developer writes and tests the first line of code. It's there when unit tests are run on a continuous integration server. And it's watching while any QA tests are run or Selenium scripts are executed. Even a simple crawler is now an extremely powerful security tool. Because alerts are provided to developers, in their environment, instantly after vulnerabilities are introduced, they enable teams to commit clean code the first time.

"Coverage" is a deceptively complex concept. I hope this discussion helps you build an application security program that allows you to understand and improve coverage, instead of just measuring the size of your pile of vulnerabilities.



Jeff brings more than 20 years of security leadership experience as co-founder and Chief Technology Officer of Contrast Security. He recently authored the DZone DevSecOps, IAST, and RASP refcards and speaks frequently at conferences including JavaOne (Java Rockstar), BlackHat, QCon, RSA, OWASP, Velocity, and PivotalOne. Jeff is also a founder and major contributor to OWASP, where he served as Global Chairman for 9 years, and created the OWASP Top 10, OWASP Enterprise Security API, OWASP Application Security Verification Standard, XSS Prevention Cheat Sheet, and many more popular open source projects. Jeff has a BA from Virginia, an MA from George Mason, and a JD from Georgetown.
