May 22, 2020

Sensitive data often leaks out through applications. The privacy risk is not developer negligence, but rather misplaced trust in pre-General Data Protection Regulation (GDPR) solutions and infrastructure. Enterprises should turn to modern AppSec solutions with automated sensitive-data masking capabilities that can effectively scale.

With privacy legislation like the California Consumer Privacy Act (CCPA) and the European Union’s GDPR driving awareness of privacy protection, many data-driven enterprises have been surprised to learn which of their applications contain sensitive data and where that sensitive data goes and why. This is prompting them to reevaluate their data protection controls around employee and customer personal and private information. Customer sensitive data may leak along with any data leaving the network.

The result is that data security relies on how sensitive data from the application is managed. Questions organizations need to ask include:

It is important to remember that applications regularly decrypt customer data as part of their normal operation—true even of encrypted data—which means AppSec models may continuously produce data leaks.

AppSec teams often apply data encryption to areas marked sensitive and where data is at rest, such as a database. However, they can unknowingly permit applications to write vast amounts of that sensitive data into log files. Leaks of sensitive data through application logs can happen for many operational reasons, such as developer debugging or code that logs method arguments.

While log files are a nice assist to performance and issue investigation, they are often in the unfortunate format of an unencrypted plain-text file. Thus, when applications log sensitive data, strong encryption protocols deployed elsewhere are rendered ineffective, resulting in data leakage and noncompliance with standard privacy regulations.

As an example, applications that accidentally log incoming passwords are vulnerable to significant privacy risk from sensitive data leaks. Even failed password-attempt logging exposes passwords. The outcome is that anyone who can view an unencrypted plain-text log file is free to see the password list for an application.

    public static boolean checkLogin(String user, String password) {
        // Writes the plain-text password to the log on every attempt, successful or not
        LOG.debug("Attempting login for {} with password {}", user, password);
        //…
    }
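For comparison, here is a minimal sketch of the same method with the password kept out of the log call entirely. It is an illustration only: the class name `LoginAudit` and the helper `attemptMessage` are hypothetical, `java.util.logging` stands in for whatever logging API the application really uses, and the credential check stays elided as in the snippet above.

```java
import java.util.logging.Logger;

final class LoginAudit {
    private static final Logger LOG = Logger.getLogger(LoginAudit.class.getName());

    // Hypothetical helper: builds the log message from the user name only,
    // so the password can never reach the log through this call.
    static String attemptMessage(String user) {
        return "Attempting login for " + user;
    }

    static boolean checkLogin(String user, String password) {
        LOG.fine(attemptMessage(user));
        //… credential check elided, as in the original snippet
        return false; // placeholder so the sketch compiles
    }
}
```

A manual fix like this only covers the one log statement a developer remembers to change, which is why the rest of this post focuses on automated masking.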

Most applications have myriad valid reasons to log that are absolutely acceptable. Log activity, however, if left unmanaged by security, can become a large source of unauthorized data breach events. Security teams and developers need to ask if their applications write sensitive data to unencrypted logs, and if so, where does that sensitive data ultimately go.

For example, an application may pass data around in a large generic data structure, such as a Java Collection or Map, simply because it is convenient and fast. If the application stores sensitive customer data inside one of these in-memory structures, that data will appear in an application log whenever the structure's contents are logged. This type of logging is common with utility classes and microservices, where the method author does not know what is arriving. With method chaining, an application developer may be unaware of all the other methods that run as a result of what the app does. The unfortunate result is that a seemingly innocuous method call or feature may accidentally leak sensitive data through a log.

    void analyze(Map<String, String> mapData) {
        // Logs every key and value, including any sensitive entries the caller passed in
        mapData.entrySet().forEach(entry -> LOG.debug("Analyzing {}: {}", entry.getKey(), entry.getValue()));
        //…
    }
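One way a developer might guard a method like this by hand is to mask values whose keys look sensitive before anything is logged. The sketch below assumes a small, hypothetical list of key names (`password`, `ssn`, `creditcard`) and a hypothetical class `MapLogSanitizer`; a real policy would need to be broader, and this is not how any particular product implements masking.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

final class MapLogSanitizer {
    // Hypothetical key names treated as sensitive (compared case-insensitively).
    private static final Set<String> SENSITIVE_KEYS = Set.of("password", "ssn", "creditcard");

    // Returns a copy of the map that is safe to log: values stored under
    // sensitive keys are replaced with a fixed marker, all others are kept.
    static Map<String, String> sanitize(Map<String, String> data) {
        Map<String, String> safe = new LinkedHashMap<>();
        for (Map.Entry<String, String> entry : data.entrySet()) {
            String key = entry.getKey();
            boolean sensitive = key != null
                    && SENSITIVE_KEYS.contains(key.toLowerCase(Locale.ROOT));
            safe.put(key, sensitive ? "(redacted)" : entry.getValue());
        }
        return safe;
    }
}
```

Logging `MapLogSanitizer.sanitize(mapData)` instead of the raw entries keeps ordinary values visible for debugging while hiding the flagged ones. The weakness is that it depends on every author of every utility method remembering to call it.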

For obvious reasons, customers do not want their sensitive data left open or unprotected. Additionally, businesses require AppSec solutions to meet compliance assurances mandated by GDPR and CCPA regulations, including DevOps-native tools to accurately scrub agent logs, both on-premises and in the cloud. This is especially important for business-critical applications. Ultimately, it is up to AppSec teams to deploy compliant solutions.

One way to do so is to look at simple log statements, ensuring sensitive-data security compliance and protection.

    LOG.debug("Attempting login for {} with password {}", user, password);

Application logs would then produce standards-compliant sanitized log output.

Attempting login for username with password (redacted).

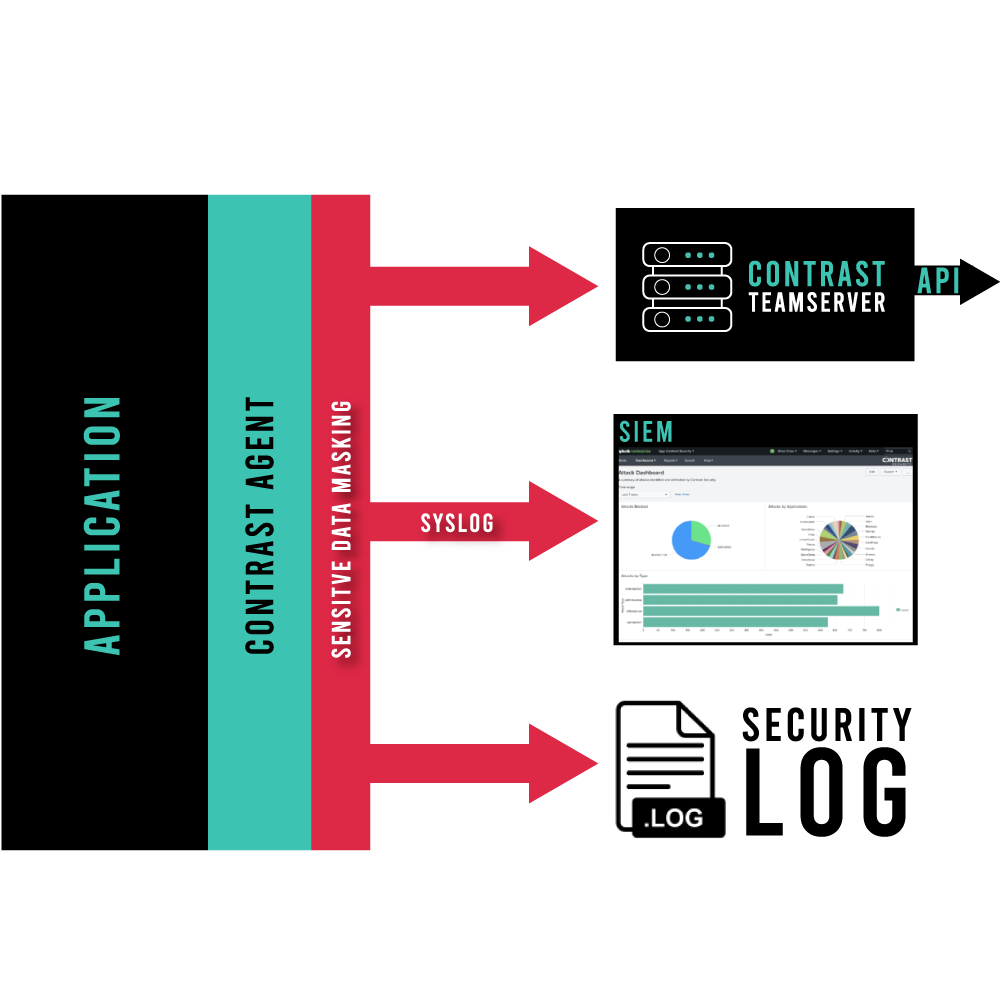

Sensitive data-masking operations should not be left to external AppSec defenses located outside of applications; those perimeter locations can only see and block data over the web, while sensitive customer data typically leaks through a log. Contrast Security meets regulatory compliance and prevents sensitive data leaks by automatically monitoring logs and redacting any sensitive data. Built-in log analysis and redaction is the most direct way for an AppSec tool to support data privacy compliance (see Figure 1).

Figure 1. Sensitive data masking is performed inside a single application agent, and all downstream existing logs are masked and thus compliant with GDPR and CCPA.

Contrast performs repeatable and consistent sensitive data masking in logs by watching all arguments sent to a logging application programming interface (API). Sensitive data identification and masking is then automatically performed by the Contrast language-agent through matching keyword values within HTTP requests during input processing in the application.
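To make the idea concrete, here is a simplified sketch of keyword-based masking. It is not Contrast's implementation; the class `LogArgumentMasker`, its method names, and the keyword list are all assumptions made for illustration. During input processing it remembers the values of request parameters whose names contain a sensitive keyword, and before logging it replaces those values wherever they appear in the arguments.

```java
import java.util.HashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

final class LogArgumentMasker {
    // Hypothetical keywords; a parameter whose name contains one is treated as sensitive.
    private static final Set<String> KEYWORDS = Set.of("password", "passwd", "ssn", "card");

    // Values seen under sensitive parameter names for the current request.
    private final Set<String> sensitiveValues = new HashSet<>();

    // Input processing: record the value of every parameter whose name matches a keyword.
    void observeRequest(Map<String, String> requestParameters) {
        for (Map.Entry<String, String> param : requestParameters.entrySet()) {
            String name = param.getKey().toLowerCase(Locale.ROOT);
            String value = param.getValue();
            if (value != null && !value.isEmpty()
                    && KEYWORDS.stream().anyMatch(name::contains)) {
                sensitiveValues.add(value);
            }
        }
    }

    // Logging: return string copies of the arguments with recorded values replaced.
    Object[] mask(Object... args) {
        Object[] masked = new Object[args.length];
        for (int i = 0; i < args.length; i++) {
            String text = String.valueOf(args[i]);
            for (String secret : sensitiveValues) {
                text = text.replace(secret, "(redacted)");
            }
            masked[i] = text;
        }
        return masked;
    }
}
```

In this sketch a wrapper around the logging call would pass `masker.mask(user, password)` to the logger instead of the raw arguments. Matching on values rather than on argument names is what lets the approach catch sensitive data that has been copied into generic structures or passed through several methods before it is logged.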

All data Contrast sees is analyzed and all sensitive customer data is masked to remove any possibility of a sensitive data leak or loss of personally identifiable information (PII). Following are the different types of data that are masked:

Modern embedded security like Contrast uses automated runtime application self-protection (RASP) that works inside an application to give non-security staff, such as developers, the freedom to effortlessly set sensitive-data security policy out of the box without any specialized help. This enables organizations to scale their businesses effectively while reducing risk.

Instead of seeking additional tools or expertise, Contrast embedded agents continuously observe code metadata from inside application runtimes. This ensures that customer data remains protected no matter where it is located (on-premises or in the cloud). At the same time, Contrast continuously safeguards a business’s reputation with customers no matter their level of AppSec maturity.

Java developers deploying Contrast also have the added bonus of being able to normalize all the different logging APIs used by an application, and there are many: Log4j, JDK1.4 Logger, Logback, SLF4J, Log4j2, and Flogger, to name a few.

More enterprises are choosing out-of-the-box GDPR and CCPA compliance for their AppSec solution. When employed in concert with a modern security instrumentation approach, they have the ability to continuously observe and prevent sensitive customer data from leaking into log files. Developers can easily configure sensitive-data masking policy for their AppSec and enjoy secure and compliant logging without adding any security expertise, cost, or time. In doing so, they can ensure AppSec logging protection for all of their customer application data, including PII.

Risk management is a growing concern and focus for many organizations. Expanded DevOps processes and development cycles increase risk, which is exacerbated with new data privacy mandates such as GDPR and CCPA. Yet, with the right AppSec logging management and redaction in place, security teams can minimize the risk of exposing sensitive customer data and being found in violation of privacy mandates.

For organizations seeking to see how Contrast masks sensitive data, developers can get started by trying the free, full-strength Contrast Community Edition. And for those who prefer a deeper drill down and a Contrast subject-matter expert to answer questions and walk through the Contrast DevOps-native AppSec Platform in detail, you can request a demo.
